Contrastive Language-Image Pre-Training (CLIP): A Comprehensive Overview📐

In machine learning, Contrastive Language-Image Pre-Training (CLIP) has attracted a lot of interest because of its ability to connect language and vision.

OpenAI developed CLIP to learn from extensive, diverse collections of images paired with text. Instead of manually labeled categories, it uses the natural-language text that accompanies each image as its training signal.[1] This approach helps the model generalize to new tasks without large amounts of task-specific labeled data, making it highly scalable and adaptable.

This guide will help you understand CLIP’s core aspects, underlying mechanisms, applications, strengths, and limitations, and it examines CLIP’s impact on the future of AI models that integrate language and vision.

1. What is CLIP: An Overview

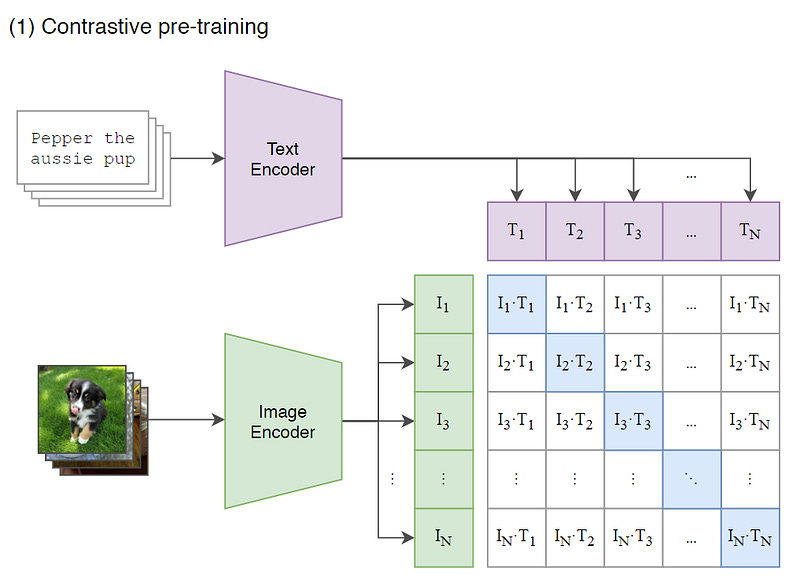

Contrastive Language-Image Pre-Training (CLIP) is a neural network trained on pairs of images and their corresponding text descriptions. Instead of training on individual labels or a single task, CLIP learns from a wide range of image-text pairs sourced from the web. Its primary goal is a model that understands the relationship between language and visual data and can generalize to new, unseen tasks.[2]

CLIP differs from traditional image classification models, which rely on supervised learning and large, task-specific datasets. Instead, CLIP uses contrastive learning to associate images and text based on semantic similarities. This approach allows CLIP to perform various tasks out of the box, including zero-shot classification, image retrieval, and more.[3]

2. How Does CLIP Work?

To better understand CLIP, it is important to break down its two main components: the vision encoder and the language encoder.

2.1 Vision Encoder

The vision encoder processes images and extracts visual features. It uses either a convolutional neural network (CNN), such as a ResNet, or a transformer-based architecture such as the Vision Transformer (ViT) to convert an image into a high-dimensional feature vector, a numerical representation of the image.[4] This vector is then projected into the semantic space that image and text representations share.

The goal is a representation that captures the image’s most important visual details, making it easier to match the image to its text description.
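
For the ViT option, the image is first cut into fixed-size, non-overlapping patches that become the Transformer’s input tokens. The sketch below shows only that first step in plain Python; the linear patch embedding, the class token, and the Transformer layers are omitted, and `patchify` and `num_patches` are hypothetical helper names. ViT-B/32, one of the released CLIP image encoders, uses 32 × 32 patches on 224 × 224 inputs.

```python
def patchify(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened, non-overlapping patches.

    Patches are read row by row, left to right. H and W must be divisible
    by patch_size.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat = []
            for row in image[top:top + patch_size]:
                for pixel in row[left:left + patch_size]:
                    flat.extend(pixel)  # append this pixel's C channel values
            patches.append(flat)
    return patches


def num_patches(image_size, patch_size):
    """Number of patch tokens a ViT produces for a square image."""
    return (image_size // patch_size) ** 2


# A toy 4 x 4 "RGB" image whose pixel values encode their (row, col) position.
toy = [[(r, c, 0) for c in range(4)] for r in range(4)]
patches = patchify(toy, 2)
print(len(patches), len(patches[0]))  # 4 12  (four patches of 2*2*3 values)
print(num_patches(224, 32))           # 49  (a 7 x 7 grid for ViT-B/32)
```

Each flattened patch would then be multiplied by a learned matrix to become one token embedding, so the token count grows quadratically as the patch size shrinks.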

2.2 Language Encoder

This component processes text, capturing the meaning and context of words and sentences.[5] It is based on a Transformer architecture (in the original CLIP, a GPT-style text Transformer) that maps text descriptions of images into a feature space.[6] Like the vision encoder, the language encoder produces a high-dimensional vector representing the semantics of the text.
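
In the original CLIP, the text Transformer’s output at the end-of-sequence token serves as the feature for the whole text; it is then linearly projected into the shared space and normalized. The sketch below shows only that pooling-and-projection step, with made-up hidden states standing in for a real Transformer and the layer normalization omitted. `text_embedding`, `eos_id`, and the toy matrix are hypothetical.

```python
import math


def project(vector, matrix):
    """Multiply a row vector (length d_model) by a d_model x d_embed matrix."""
    return [sum(v * row[j] for v, row in zip(vector, matrix))
            for j in range(len(matrix[0]))]


def l2_normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def text_embedding(hidden_states, token_ids, eos_id, text_projection):
    """Pool per-token Transformer outputs into one unit-length text embedding.

    hidden_states holds one d_model-sized vector per token (final layer).
    The vector at the end-of-sequence position summarizes the whole text.
    """
    eos_position = token_ids.index(eos_id)
    pooled = hidden_states[eos_position]
    return l2_normalize(project(pooled, text_projection))


# Hypothetical values: 3 tokens (start, word, end), d_model = 3, d_embed = 2.
hidden = [[0.1, 0.0, 0.2], [0.5, 0.4, 0.0], [3.0, 0.0, 4.0]]
ids = [1, 7, 2]  # 2 stands in for the end-of-sequence token id
W = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
print(text_embedding(hidden, ids, eos_id=2, text_projection=W))  # [0.6, 0.8]
```

Because the Transformer uses masked (left-to-right) attention, the last token is the only position that has seen the entire caption, which is why it is a natural place to pool.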

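The key step is that both encoders map their inputs into a shared embedding space. In this space, CLIP uses a technique called contrastive learning to align images with their corresponding textual descriptions.[7] It learns to maximize the similarity between matching image-text pairs while pushing apart unrelated ones.[8] Concretely, CLIP compares every image in a training batch with every text in that batch and is trained so that each image scores highest against its own caption, and each caption scores highest against its own image.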
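
The objective can be sketched as a symmetric cross-entropy over the batch’s cosine-similarity matrix, following the pseudocode in the original CLIP paper. This is a plain-Python illustration on tiny inputs, not a training implementation (there is no autograd), the function names are hypothetical, and the temperature is a fixed argument here even though CLIP learns it.

```python
import math


def l2_normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def cross_entropy(logits, target):
    """Negative log-softmax probability of the target index (numerically stable)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss over a batch of N matching image-text pairs.

    Pair i is (image_embs[i], text_embs[i]); every other combination in the
    batch acts as a negative.
    """
    images = [l2_normalize(v) for v in image_embs]
    texts = [l2_normalize(v) for v in text_embs]
    n = len(images)
    # logits[i][j] = cosine similarity of image i and text j, divided by the temperature
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
              for img in images]
    # Image -> text: row i should put its highest score on column i.
    loss_images = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text -> image: column j should put its highest score on row j.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_texts = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (loss_images + loss_texts) / 2


aligned = clip_contrastive_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])
swapped = clip_contrastive_loss([[1, 0], [0, 1]], [[0, 1], [1, 0]])
print(aligned < swapped)  # True: matched pairs give a much lower loss
```

Averaging the two directions keeps the loss symmetric, so neither encoder is favored over the other.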

3. Pre-Training and Fine-Tuning in CLIP

CLIP can generalize across multiple tasks due to its large-scale pre-training. Let’s break down its pre-training and fine-tuning processes:

3.1 Pre-Training on Diverse Data

CLIP’s pre-training starts by feeding the model a vast dataset of image-text pairs (about 400 million in the original work). These pairs are collected from the web and cover a wide variety of objects, scenes, and concepts. Unlike traditional supervised models, which require labeled datasets for specific tasks, CLIP can learn from noisy, unstructured data, much like humans learn from everyday experience.

During pre-training, CLIP learns the relationship between images and their associated text through the contrastive learning process. The diversity of the data helps CLIP build a robust understanding of both visual and linguistic patterns, which improves its ability to generalize.
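
One reason this setup scales well is that each batch of N matched pairs supplies N positives and N² − N negatives at no extra labeling cost. The worked example below uses two details reported in the CLIP paper: a batch size of 32,768, and a temperature learned as a log-parameterized scale that starts at the equivalent of 0.07 and is clipped so logits are never multiplied by more than 100. The helper names are hypothetical.

```python
import math

CLIP_BATCH_SIZE = 32_768  # minibatch size reported in the CLIP paper


def contrastive_pair_counts(batch_size):
    """Positive and negative image-text pairs in one batch of matched pairs."""
    positives = batch_size                             # diagonal of the N x N matrix
    negatives = batch_size * batch_size - batch_size   # every off-diagonal cell
    return positives, negatives


def logit_scale(log_scale_param, max_scale=100.0):
    """Turn a learned log-parameterized scale into the multiplier on similarities.

    Clipping at max_scale keeps the logits from being scaled by more than 100.
    """
    return min(math.exp(log_scale_param), max_scale)


initial_param = math.log(1 / 0.07)  # temperature initialized to 0.07
print(contrastive_pair_counts(CLIP_BATCH_SIZE))  # (32768, 1073709056)
print(round(logit_scale(initial_param), 2))      # 14.29
print(logit_scale(10.0))                         # 100.0 (exp(10) is clipped)
```

Learning the logarithm instead of the scale itself keeps the multiplier positive and lets the optimizer adjust it smoothly.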

3.2 Zero-Shot Learning with CLIP

One of CLIP’s most significant advantages is its ability to perform zero-shot learning.[9] In this context, zero-shot learning means that the model can handle new tasks without additional training or fine-tuning on task-specific data.

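This is possible because CLIP’s pre-training equips it with a rich understanding of how language and images relate. To classify an image, each candidate label is written as a text prompt such as “a photo of a dog,” the image and all prompts are embedded, and the label whose prompt embedding is most similar to the image embedding is chosen.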
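
The zero-shot recipe can be sketched with precomputed embeddings. The prompt template “a photo of a {label}.” comes from the CLIP paper; the embeddings are made up, a fixed similarity scale of 100 is assumed, and the function names are hypothetical rather than part of any CLIP library.

```python
import math


def build_prompts(labels, template="a photo of a {}."):
    """Turn bare class names into caption-like prompts for the text encoder."""
    return [template.format(label) for label in labels]


def l2_normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(image_emb, prompt_embs, labels, logit_scale=100.0):
    """Pick the label whose prompt embedding is most similar to the image embedding."""
    image = l2_normalize(image_emb)
    scores = [logit_scale * sum(a * b for a, b in zip(image, l2_normalize(p)))
              for p in prompt_embs]
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs


labels = ["dog", "cat", "car"]
print(build_prompts(labels))  # ['a photo of a dog.', 'a photo of a cat.', 'a photo of a car.']
# Made-up embeddings standing in for encoder outputs of the image and the three prompts.
label, probs = zero_shot_classify([0.9, 0.3, 0.1], [[1, 0, 0], [0, 1, 0], [0, 0, 1]], labels)
print(label)  # dog
```

Swapping in a new set of labels only requires encoding new prompts; the image encoder and its weights stay unchanged.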

3.3 Fine-Tuning for Specific Tasks

While CLIP is highly effective at zero-shot learning, it can also be fine-tuned for specific tasks. Fine-tuning involves adjusting the model’s parameters on a smaller, task-specific dataset to improve performance on that particular task. It can help optimize CLIP’s performance in specialized domains such as medical imaging, autonomous driving, or video analysis, where domain-specific data may offer additional benefits.
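
A lighter-weight alternative to full fine-tuning, and one the CLIP paper uses to evaluate its features, is a linear probe: keep CLIP frozen and train only a linear classifier on its image embeddings. The sketch below shows that swapped-in technique rather than full fine-tuning. It trains softmax regression with plain SGD on made-up 2-D features; all names and data are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def class_scores(weights, biases, x):
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def train_linear_probe(features, labels, num_classes, lr=0.5, epochs=200):
    """Fit softmax regression on frozen embeddings with plain SGD.

    Only this small head is trained; the encoders that produced
    `features` are left untouched.
    """
    dim = len(features[0])
    weights = [[0.0] * dim for _ in range(num_classes)]
    biases = [0.0] * num_classes
    for _ in range(epochs):
        for x, y in zip(features, labels):
            probs = softmax(class_scores(weights, biases, x))
            for c in range(num_classes):
                # Gradient of the cross-entropy loss w.r.t. the score of class c.
                grad = probs[c] - (1.0 if c == y else 0.0)
                for i in range(dim):
                    weights[c][i] -= lr * grad * x[i]
                biases[c] -= lr * grad
    return weights, biases


def predict(weights, biases, x):
    scores = class_scores(weights, biases, x)
    return max(range(len(scores)), key=lambda c: scores[c])


# Made-up 2-D "embeddings" for two hypothetical classes.
train_x = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.8]]
train_y = [0, 0, 1, 1]
W, b = train_linear_probe(train_x, train_y, num_classes=2)
print([predict(W, b, x) for x in train_x])  # [0, 0, 1, 1]
```

A probe is cheap to train and cannot damage the pre-trained encoders; full fine-tuning updates the encoders too and can adapt further, at higher cost and with a greater risk of losing zero-shot ability.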

4. Applications of CLIP

Below are some of the key applications of CLIP across different fields:

Image Classification: From identifying different types of flowers to detecting harmful content in images, CLIP can classify images into various categories without task-specific labeled training data.[10]

Image Retrieval: CLIP can power search engines that let users find images from textual descriptions, such as “a photo of a sunset over a mountain range.”[10]

Content Creation: CLIP is used in AI art generators like DALL-E 2, allowing users to create stunning images from text prompts.[12] Imagine typing “a futuristic city with flying cars and holographic advertisements” and having an AI generate a picture matching that description.

Multimodal Learning: CLIP enables AI systems to combine visual and textual information for more comprehensive understanding, such as in autonomous vehicles that need to interpret both traffic signs and road conditions.[13]
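
Text-to-image retrieval ranks in the opposite direction from zero-shot classification: embed the text query once, then rank a collection of precomputed image embeddings by cosine similarity. A minimal sketch, assuming the embeddings already exist; the file names, vectors, and `search_images` are hypothetical, and a large collection would typically use an approximate nearest-neighbor index instead of this linear scan.

```python
import math


def l2_normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def search_images(query_emb, image_embs, image_ids, top_k=3):
    """Rank images by cosine similarity to a text query embedding."""
    query = l2_normalize(query_emb)
    scored = []
    for image_id, emb in zip(image_ids, image_embs):
        similarity = sum(a * b for a, b in zip(query, l2_normalize(emb)))
        scored.append((similarity, image_id))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [image_id for _, image_id in scored[:top_k]]


# Hypothetical index: image embeddings would be computed once, offline.
ids = ["beach.jpg", "sunset_mountains.jpg", "city_night.jpg"]
index = [[0.2, 0.9, 0.1], [0.9, 0.3, 0.2], [0.1, 0.1, 0.9]]
query = [1.0, 0.2, 0.1]  # stands in for the text embedding of the search query
print(search_images(query, index, ids, top_k=2))  # ['sunset_mountains.jpg', 'beach.jpg']
```

Because the image side is precomputed, each search costs only one text-encoder pass plus the similarity lookup.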

5. Challenges and Future Directions

While CLIP has impressive capabilities, it still faces challenges, such as biases inherited from its training data and the need for significant computational resources.[14] Ongoing research is addressing these issues, aiming to create more robust, fair, and efficient CLIP models.

CLIP represents a significant step towards building AI systems that can truly understand and interact with the world in a multimodal way. As research progresses, we can expect even more innovative applications of CLIP and similar models, leading to AI that can seamlessly integrate vision and language, opening up new possibilities in various fields.